Introduction to Deep Learning Models

Deep learning models have revolutionized the field of time series forecasting, offering significant performance improvements over traditional methods. These models are particularly well-suited for handling complex temporal relationships and high-dimensional data. In this section, we will introduce the basics of deep learning models and their applications in time series forecasting.

Deep learning models are a type of machine learning model that uses multiple layers of artificial neural networks to learn complex patterns in data. These models are trained using large datasets and can learn to recognize patterns and relationships that are not easily apparent to humans. In the context of time series forecasting, deep learning models can be used to predict future values of a time series based on past values, known future inputs, and other relevant information. By leveraging their ability to learn temporal relationships, these models can provide more accurate and reliable forecasts, making them invaluable tools for decision-makers.

Our data science and analytics teams handle and apply lots of data for insightful decision-making. Last year, I presented the data science team with a challenge: use historical data to predict a key business driver for each of the next 8 periods. We wanted a data-driven preview of what we might see in each of those periods so that we could anticipate the actual outcome and make better decisions eight periods ahead.

To achieve this, we employed multi-horizon forecasting, which allows us to predict key business drivers over multiple future periods using the Temporal Fusion Transformer (TFT) model.

The data science team went to work researching ways we could do this and tested a few different methodologies. We had lots of input data from our own and public sources to feed any model we wanted to test, which worked well for us. With that said, we had low expectations about finding a predictive model that produced anything reliable.

Testing different algorithms is always our approach. For the semi-technical readers: before settling on Temporal Fusion Transformer (TFT), the algorithms we tested included ARIMA, VAR, GARCH and ARCH models (univariate), Prophet, N-HiTS, and N-BEATS. TFT is an attention-based deep learning neural network algorithm. Using a mix of inputs, it produces a forecast over multiple periods in a future time horizon that you determine. You can predict days, weeks, months, or quarters into the future (really, any interval is possible). Your choice.
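To make "multi-horizon" concrete, here is a minimal sketch in plain Python of how one series can be cut into training samples, each pairing a window of past values with the next 8 periods as targets. The function name `make_windows`, the 12-period lookback, and the toy series are illustrative assumptions, not details of our model.

```python
def make_windows(series, lookback, horizon):
    """Split a series into (past, future) pairs for multi-horizon training.

    Each sample pairs `lookback` past values with the `horizon` values
    that immediately follow them.
    """
    samples = []
    last_start = len(series) - lookback - horizon
    for start in range(last_start + 1):
        past = series[start:start + lookback]
        future = series[start + lookback:start + lookback + horizon]
        samples.append((past, future))
    return samples


# Toy series of 24 periods (placeholder values, not real data).
series = list(range(24))
samples = make_windows(series, lookback=12, horizon=8)
print(len(samples))   # number of training samples
print(samples[0][1])  # the 8 targets paired with the first window
```

A model trained on pairs like these learns to emit all 8 future values at once, rather than forecasting one step and feeding it back in.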

Understanding the Temporal Fusion Transformer

The Temporal Fusion Transformer (TFT) is a type of deep learning model specifically designed for time series forecasting. The TFT model is based on the transformer architecture, a neural network structure well-suited for handling sequential data. By combining self-attention mechanisms and recurrent neural networks, the TFT model excels at learning temporal relationships in data.

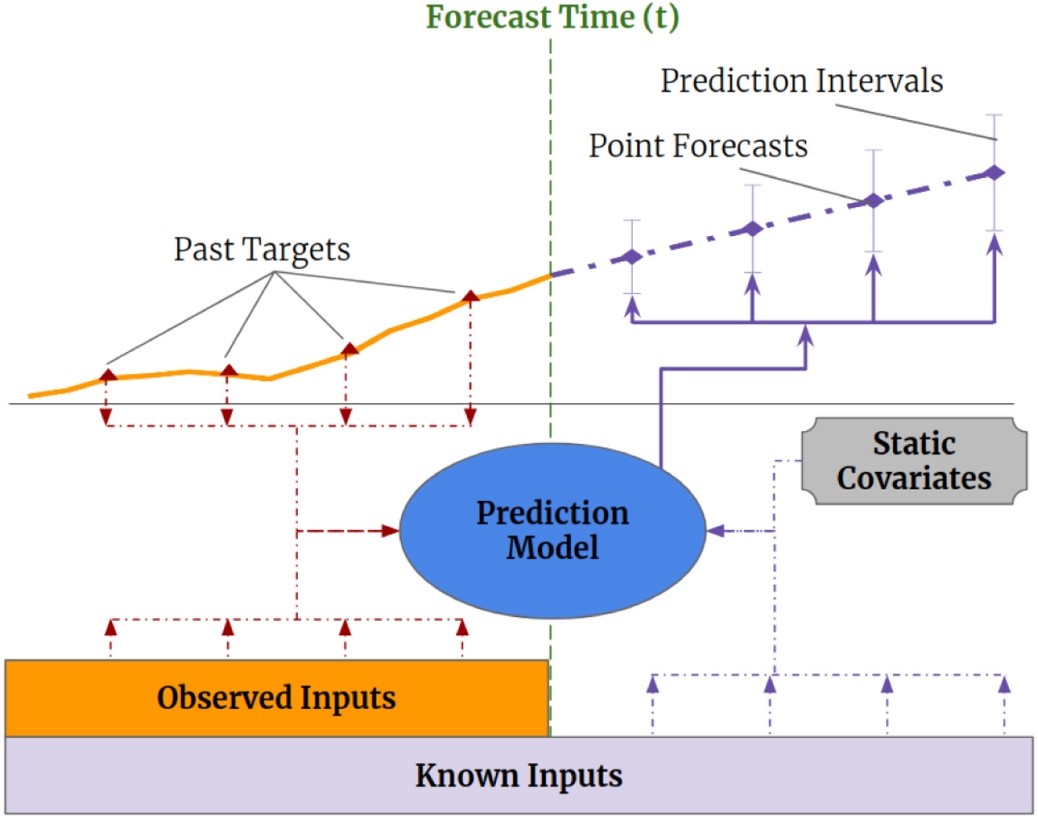

The TFT model is designed to handle multiple types of inputs, including past inputs, known future inputs, and static covariates. Variable selection networks pick out the relevant input variables at each time step, so that only the most pertinent information is used for predictions, while gating mechanisms suppress unnecessary components of the network, which helps performance. Context vectors derived from the static covariates enrich the temporal representations: they condition how the input features are processed, helping the model adapt and apply non-linear transformations only where needed. The temporal fusion decoder then combines information from past and future inputs. Finally, training with a quantile regression loss lets the TFT model generate prediction intervals, providing a range of possible future outcomes rather than a single point forecast.
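The quantile regression loss mentioned above is often called the pinball loss. The sketch below, in plain Python, shows how it penalizes under- and over-prediction asymmetrically for a given quantile; the forecast values are made up for illustration, and summing over three quantiles (10th, 50th, 90th) is an assumed, common choice rather than a description of our configuration.

```python
def quantile_loss(y_true, y_pred, q):
    """Pinball loss for one quantile q in (0, 1), averaged over the samples."""
    total = 0.0
    for actual, predicted in zip(y_true, y_pred):
        error = actual - predicted
        # Under-prediction is weighted by q, over-prediction by (1 - q).
        total += max(q * error, (q - 1) * error)
    return total / len(y_true)


actuals = [10.0, 12.0, 9.0]
p10 = [8.0, 10.0, 8.0]    # hypothetical 10th-percentile forecasts
p50 = [10.0, 11.0, 10.0]  # hypothetical median forecasts
p90 = [12.0, 14.0, 11.0]  # hypothetical 90th-percentile forecasts

# The training objective sums the pinball loss over all quantiles.
total_loss = (
    quantile_loss(actuals, p10, 0.1)
    + quantile_loss(actuals, p50, 0.5)
    + quantile_loss(actuals, p90, 0.9)
)
print(total_loss)
```

Because a low quantile is punished more for sitting above the actual value and a high quantile more for sitting below it, the fitted 10th and 90th percentile forecasts spread out into a prediction interval around the median.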

The picture below shows the concept of how TFT works.

Source: Bryan Lim, Sercan Ö. Arık, Nicolas Loeff, Tomas Pfister. “Temporal Fusion Transformers for interpretable multi-horizon time series forecasting.” International Journal of Forecasting. Volume 37, Issue 4, October–December 2021, Pages 1748-1764.

Understanding TFT Architecture

Training and Implementing the Model

Training a TFT model involves feeding the model a large dataset of time series data and adjusting the model’s parameters to minimize the loss function. The model can be trained using a variety of optimization algorithms, including stochastic gradient descent and Adam. These algorithms help fine-tune the model to improve its accuracy and performance. By focusing on the most relevant variables and filtering out unnecessary noisy inputs, the model’s learning capacity is enhanced, leading to better predictive accuracy.

Implementing a TFT model in practice involves several steps, including data preparation, model selection, and hyperparameter tuning. Data preparation is crucial, as it ensures that the input data is clean and formatted correctly for the model. Model selection involves choosing the appropriate TFT architecture and settings for the specific forecasting task. Hyperparameter tuning is the process of adjusting settings that are fixed before training, such as the learning rate, hidden layer size, and number of attention heads, to achieve the best possible performance. The model can be implemented using a variety of programming languages and deep learning frameworks, such as Python and TensorFlow, making it accessible to a wide range of practitioners.

A continuous improvement process best describes how we developed and continue to refine the model. It’s a never-ending process of improvement, as a true crystal ball is never achieved.

These are the four stages of development we went through after choosing TFT as our algorithm:

Stage 1a: Selecting all logical observed inputs and testing how they drive the model. We started with over 100 inputs, and the final model used only 20. Go to Stage 1b as needed.

Stage 1b: Refining the time intervals of the observed inputs. Since the inputs might come in varying time intervals (daily, weekly, monthly, quarterly, etc.), we needed to find methods to standardize them. If you can, choose an interval that matches the decision-making forecast you are producing. Go back to Stage 1a as needed.

Stage 2: Model iteration and improvement. Complete backtesting. Examine early predictions. Go back to Stages 1a and 1b as needed. At this point, you have probably settled on one or two of the most promising algorithms.

Stage 3: Begin using the model in production and compare its predictions to the future periods as they unfold. Learn and refine by going back to any previous stage as needed.

Stage 4: Continuous improvement loop. Write a long-term roadmap. Test new inputs as they are presented. Continuously scrutinize the predictions against what actually happens; learn and make changes by going back to any previous stage as needed.
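The interval standardization in Stage 1b can be sketched as follows. This is a minimal illustration in plain Python, assuming a daily input that needs to be rolled up to monthly means; the function name `to_monthly_mean` and the sample data are hypothetical, and the mean is only one possible aggregation (sums or end-of-period values may suit other inputs better).

```python
from collections import defaultdict
from datetime import date, timedelta


def to_monthly_mean(observations):
    """Aggregate (date, value) pairs to one mean value per calendar month."""
    buckets = defaultdict(list)
    for day, value in observations:
        buckets[(day.year, day.month)].append(value)
    return {
        month: sum(values) / len(values)
        for month, values in sorted(buckets.items())
    }


# Hypothetical daily input covering January and February 2024.
start = date(2024, 1, 1)
daily = [(start + timedelta(days=i), float(i)) for i in range(60)]
monthly = to_monthly_mean(daily)
print(monthly)
```

In practice a dataframe library's resampling function, such as pandas `resample`, does the same job with less code; the point here is only that every input ends up on the same time grid as the forecast.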

Note that at any stage of development, you can use the TFT encoder-decoder to measure the importance of different inputs and learn which ones have the most impact on your prediction.

Below are the results of our model. The orange line is the actual result of the key driver, and the blue line is the prediction of the key driver that was made 8 periods earlier. The area to the right without the orange line is the next 8-period forecast. So, at Period 19, we can use the blue line forecast to take action based on what Periods 20-27 tell us. When we reach Period 20, we evaluate the updated forecast and make decisions accordingly for the future periods. This way, we have a rolling 8-period prediction/decision cycle.

As you can see, the model has been refined to the point where it makes useful predictions and handles volatility with some reliability. Right now, we don’t use it to predict the future down to the exact number, which would be ideal; instead, we use it for a directional understanding of where things are headed so we can make better decisions at the current decision point.
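One way to put a number on "directional understanding" is to check how often the forecast and the actual series move the same way from one period to the next. The sketch below is a minimal illustration in plain Python; the function name `directional_accuracy` and the sample values are assumptions for the example, not our results or necessarily the metric we use.

```python
def directional_accuracy(actuals, forecasts):
    """Share of period-to-period moves where forecast and actual agree in sign."""
    hits = 0
    comparisons = 0
    for t in range(1, len(actuals)):
        actual_move = actuals[t] - actuals[t - 1]
        forecast_move = forecasts[t] - forecasts[t - 1]
        if actual_move == 0:
            continue  # skip flat periods, where direction is undefined
        comparisons += 1
        if actual_move * forecast_move > 0:
            hits += 1
    return hits / comparisons if comparisons else float("nan")


# Made-up values: actual outcomes and the forecasts issued 8 periods earlier.
actuals = [100, 104, 103, 108, 110]
forecasts = [101, 103, 105, 107, 108]
score = directional_accuracy(actuals, forecasts)
print(score)
```

Tracking a score like this as each new period unfolds gives the Stage 3 and Stage 4 comparisons a simple, repeatable yardstick alongside visual inspection of the chart.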

Real-World Applications of the TFT Model

The Temporal Fusion Transformer (TFT) model boasts a wide array of real-world applications, demonstrating its versatility and efficacy across various domains.

Demand Forecasting: In the retail sector, the TFT model can predict future product demand, enabling businesses to optimize inventory management and reduce stockouts or overstock situations.

Energy Consumption Forecasting: The TFT model can forecast future energy consumption in buildings, aiding in the optimization of energy usage and the reduction of waste, which is crucial for sustainability efforts.

Traffic Flow Forecasting: By predicting future traffic flow, the TFT model can assist in optimizing traffic light control systems, thereby reducing congestion and improving urban mobility.

Financial Forecasting: In the financial industry, the TFT model can predict future market trends, helping investors make informed decisions and manage risks more effectively.

Healthcare Forecasting: The TFT model can forecast future healthcare trends, such as patient admission rates, which can help healthcare providers optimize resource allocation and improve patient outcomes.

The TFT model is particularly advantageous in scenarios involving multiple input variables, including past inputs, future inputs, and static covariates. Its ability to learn temporal relationships between these variables and make accurate predictions, even amidst noise and uncertainty, sets it apart. Moreover, the model’s interpretability allows users to understand the relationships between input variables and target values, making it a valuable tool for decision-makers across various industries.

Interpreting and Analyzing the Model

Interpreting and analyzing a TFT model involves several steps, including feature importance analysis and attention weight analysis. Feature importance analysis helps identify which input variables have the most significant impact on the model’s predictions. Attention weight analysis, on the other hand, examines the attention weights assigned to each input variable at each time step, providing insights into how the model processes and prioritizes information.
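Feature importance analysis can be sketched as follows, assuming the model exposes a raw selection score for each input variable at each time step. The scores are passed through a softmax so they become weights that sum to 1, and the weights are then averaged over time to rank the variables. The variable names and scores below are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_importance(weights_per_step, names):
    """Average per-step selection weights and rank variables by the result."""
    n_steps = len(weights_per_step)
    means = [
        sum(step[i] for step in weights_per_step) / n_steps
        for i in range(len(names))
    ]
    return sorted(zip(names, means), key=lambda pair: pair[1], reverse=True)


names = ["price", "promotion", "holiday"]        # hypothetical inputs
raw_scores = [[2.0, 1.0, 0.1], [1.5, 1.2, 0.3]]  # made-up scores, two time steps
weights = [softmax(step) for step in raw_scores]
ranking = mean_importance(weights, names)
print(ranking)
```

A ranking like this is what lets you trim a long list of candidate inputs down to the handful that actually drive the forecast.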

TFT implementations also provide tools for interpreting and analyzing the model, such as the TFTExplainer in the Darts library. The TFTExplainer reports feature importance and attention weights for past and future inputs, and can also be used to understand the importance of static covariates. By leveraging these tools, users can gain a deeper understanding of the model’s inner workings and make more informed decisions based on its predictions.

Overall, the temporal fusion transformer model is a powerful tool for time series forecasting that offers significant performance improvements over traditional methods. The model is well-suited for handling complex temporal relationships and high-dimensional data, and can be implemented using a variety of programming languages and deep learning frameworks.

See my blog post on survival regression for predicting consumer credit risk

FAQ

What methodology did the data science team employ to predict key business drivers for future periods using known future inputs, and how did they refine the process?

The data science team tackled the challenge of predicting future business drivers by testing various algorithms, ultimately settling on the Temporal Fusion Transformer (TFT), an attention-based deep learning neural network algorithm. Before arriving at TFT, they experimented with other methods such as ARIMA, VAR, GARCH and ARCH models (univariate), Prophet, N-HiTS, and N-BEATS. Following the selection of TFT, the team went through a continuous improvement process consisting of four stages: selecting observed inputs and refining their time intervals, iterating and improving the model through backtesting, putting it into production, and continuously refining it based on real-world outcomes.

How does the Temporal Fusion Transformer (TFT) algorithm function, and what role does it play in the predictive process?

Temporal Fusion Transformer (TFT) is a specialized transformer-based deep learning neural network, introduced by researchers at the University of Oxford and Google Cloud AI, that is designed for multi-horizon time series forecasting. It uses a mix of inputs to produce forecasts over multiple periods into the future, allowing users to predict days, weeks, months, quarters, or any desired interval. In our use, its value lies in handling volatility and providing reliable predictions, albeit not down to the exact number. Instead, it offers a directional understanding of future trends, empowering decision-makers to make informed choices at each decision point.

What does the continuous improvement loop entail, and how does the model adapt to changing circumstances?

The continuous improvement loop involves ongoing refinement and enhancement of the predictive model. It begins with selecting logical observed inputs and testing their impact on the model, followed by refining time intervals and iterating to improve the model’s accuracy through backtesting and early prediction examination. Once the model is deployed in production, its predictions are continuously compared to actual outcomes, allowing for further learning and refinement. At any stage, the TFT encoder-decoder can be used to measure the importance of different inputs, enabling the team to prioritize those with the most significant impact on predictions. This iterative process keeps the model adaptive to changing circumstances and evolving data patterns, facilitating better decision-making over time.
